翻译自 Tutorial: Use Chroma and OpenAI to Build a Custom Q&A Bot 。

在上一个教程中,我们探讨了 Chroma 作为一个向量数据库来存储和检索嵌入。现在,让我们将用例扩展到基于 OpenAI 和检索增强生成(RAG)技术构建问答应用程序。

在最初为学院奖构建问答机器人时,我们实现了基于一个自定义函数的相似性搜索,该函数计算两个向量之间的余弦距离。我们将用一个查询替换掉该函数,以在Chroma中搜索存储的集合。

为了完整起见,我们将开始设置环境并准备数据集。这与本教程中提到的步骤相同。

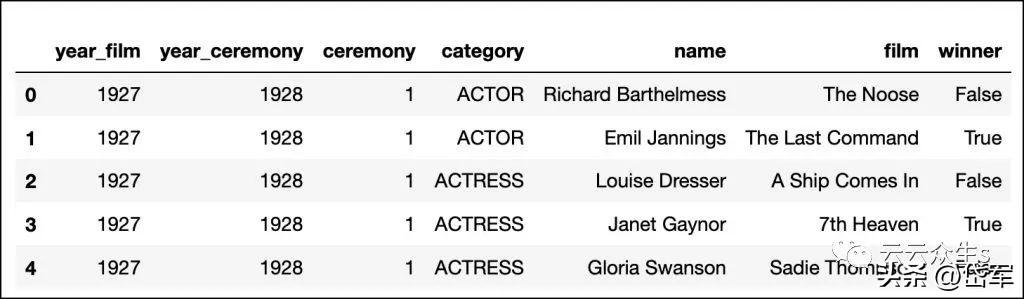

步骤1 - 准备数据集从 Kaggle 下载奥斯卡奖数据集,并将 CSV 文件移到名为 data 的子目录中。该数据集包含 1927 年至 2023 年奥斯卡金像奖的所有类别、提名和获奖者。我将 CSV 文件重命名为 oscars.csv 。

首先导入 Pandas 库并加载数据集:

import pandas as pddf = pd.read_csv('./data/oscars.csv')df.head()

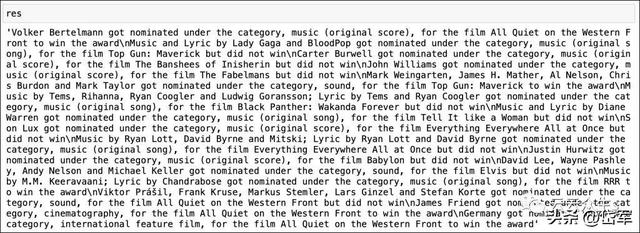

让我们将此列表转换为一个字符串,以为提示提供上下文。

res = "n".join(str(item) for item in results['documents'][0])

是时候根据上下文构建提示并将其发送到OpenAI了。

prompt = f'```{res}```Based on the data in ```, answer who won the award for the original song' messages = [ {"role": "system", "content": "You answer questions about 95th Oscar awards."}, {"role": "user", "content": prompt}]response = openai.ChatCompletion.create( model="gpt-3.5-turbo", messages=messages, temperature=0)response_message = response["choices"][0]["message"]["content"]

响应包括基于上下文和提示的组合得出的正确回答。

本教程演示了如何利用诸如 Chroma 之类的向量数据库来实现检索增强生成(RAG),以通过额外的上下文增强提示。

以下是完整的代码,供您探索:

import pandas as pdimport openaiimport chromadbfrom chromadb.utils import embedding_functionsimport osdf=pd.read_csv('./data/oscars.csv')df=df.loc[df['year_ceremony'] == 2023]df=df.dropna(subset=['film'])df['category'] = df['category'].str.lower()df['text'] = df['name'] ' got nominated under the category, ' df['category'] ', for the film ' df['film'] ' to win the award'df.loc[df['winner'] == False, 'text'] = df['name'] ' got nominated under the category, ' df['category'] ', for the film ' df['film'] ' but did not win'def text_embedding(text) -> None: response = openai.Embedding.create(model="text-embedding-ada-002", input=text) return response["data"][0]["embedding"]openai_ef = embedding_functions.OpenAIEmbeddingFunction( api_key=os.environ["OPENAI_API_KEY"], model_name="text-embedding-ada-002" )client = chromadb.Client()collection = client.get_or_create_collection("oscars-2023",embedding_function=openai_ef)docs=df["text"].tolist() ids= [str(x) for x in df.index.tolist()]collection.add( documents=docs, ids=ids)vector=text_embedding("Nominations for music")results=collection.query( query_embeddings=vector, n_results=15, include=["documents"])res = "n".join(str(item) for item in results['documents'][0])prompt=f'```{res}```who won the award for the original song'messages = [ {"role": "system", "content": "You answer questions about 95th Oscar awards."}, {"role": "user", "content": prompt}]response = openai.ChatCompletion.create( model="gpt-3.5-turbo", messages=messages, temperature=0)response_message = response["choices"][0]["message"]["content"]print(response_message)

相关文章

猜你喜欢